Articles and Blog

On

Looting: To Address It or Not

A Fifth of Bootheaven - Dual Boot is for Tourists!

Attribution Dice (The

Mobile Twist)

Masque

of the Green Death, or, Those Old Coronavirus Blues

HOWTO:

LUKS Encryption in Ubuntu 18.04

A Brief Exemplar of Social Engineering

Memo

to Google (an Ongoing Series)

Big

Data Forecast for the Week

Marshmallow's Terrible, Horrible, No Good, Very Bad MP3 Player

HOWTO:

S/MIME Setup in Thunderbird

CompTIA Security+ Certification Study Notes

Where

ideas come from (and where they go)

Diet

BOINC: A Screen Saver Module

Bitcoin:

Observations and Thoughts

Fun in

the Sun: A Solar Powered Laptop

LAMP, the Linux and Everything

Tweons:

Horribly Helpless Twitter Peons

WordPress

Conversion - Episode III: A New Nope

WordPress Conversion - Continued

WordPress

Conversion - Prologue

The Sony Hack, Strategic Questions and Options

The

Human Factor in Tech Models

HOWTO: Linux, Chromium and Flash Player

To

Kill a Mockingbird, Once and Only Once

Kill Switches and Other Mobile Realities

HOWTO: Automate temperature monitoring in CentOS Linux (a/k/a Build your own Stuxnet Day)

Wallpaper,

Screensavers and Webcams, oh my!

HOWTO: Run BOINC / SETI@Home over a Samba Server

Proper

Thinking about Computer Privacy Models

Philosophy of Technology (Kickstarter project)

Repetitive

Motion Injuries and the Computer Mouse

HOWTO: Set up a Static IP on Multiple Platforms

HOWTO:

Check if your Windows XP computer can be upgraded to

Windows 7 or Windows 8

Tweeting This Text and That Link (tweet2html.py)

Deputy

Level Heads Will Roll - The Obama IRS Scandal

Kids and Personal Responsi-woo-hoo (on Reverse Social Darwinism)

Learning New Subjects on the Cheap

The

End of Life (of Windows XP)

Women's Magazines: In a Checkout Line Near You (for International Women's Day)

HOWTO: Blackberry as Bluetooth Modem in Linux

Mandiant

on Advanced Persistent Threats

Examining Technological Vulnerability

HOWTO: Install WinFF with full features in CentOS Linux

The Age

of the Technology License?

Information

Systems: Where We are Today

Big

Business Really Is Watching You

Where are the UFOs?

With the upcoming US government report on UFO sightings over the years and what they mean or not, it is perhaps time to review that wonderful gem: Drake's Equation.

Drake's Equation is stated thus:

N=R*fpneflfifcL

N = The number of civilizations in the Milky Way galaxy whose electromagnetic emissions are detectable.

R* = The rate of formation of stars suitable for the development of intelligent life.

fp = The fraction of those stars with planetary systems.

ne = The number of planets, per solar system, with an environment suitable for life.

fl = The fraction of suitable planets on which life actually appears.

fi = The fraction of life bearing planets on which intelligent life emerges.

fc = The fraction of civilizations that develop a technology that releases detectable signs of their existence into space.

L = The length of time such civilizations release detectable signals into space.

(source: Space.com, https://www.space.com/25219-drake-equation.html)

I've always especially liked Drake's Equation because it looks scientific (and it is), yet at the same time, the point of the equation not to predict whether there is life out there, but rather to demonstrate that we cannot know based on the information that we have now.

Consider:

R* = The rate of formation of stars suitable for the development of intelligent life.

UNKNOWN. Stars form differently, we believe that much. Some stars have too much gravity to support a planetary system, some have too much radiation. Nor are we remotely near surveying all stars.

fp = The fraction of those stars with planetary systems.

UNKNOWN. At this point we can only make a best guess based on telescopic observation of change in light around stars to guess whether there is anything revolving around those stars. Also, as above we are not remotely near to having surveyed all stars we can see, let alone planets.

ne = The number of planets, per solar system, with an environment suitable for life.

UNKNOWN. If there are billions of stars, and we cannot survey all of them, that number increases exponentially when we consider all of the planets to be surveyed, even assuming that we have the technology to make meaningful observations.

fl = The fraction of suitable planets on which life actually appears.

UNKNOWN. There is another principle called the Goldilocks Principle which says that not only must a planet be at a distance from its star that life can form, but in fact it must remain at such a distance (not too hot, not too cold, but just right) for the unknown but bogglingly long time (allowing for asteroids and other life killing disasters) necessary for life to evolve.

fi = The fraction of life bearing planets on which intelligent life emerges.

UNKNOWN. We have one example to draw on: Earth. Not only is one example a ridiculous metric for extrapolation, but in our case, intelligent life is only believed to have developed after the fortuitous extinction of the dinosaurs.

fc = The fraction of civilizations that develop a technology that releases detectable signs of their existence into space.

UNKOWN. Since we only have one example to draw on for extrapolation, Earth, and our own history includes an extinction of life before intelligent life evolved, and because the prior extinction was based on a random element (an asteroid), even if intelligent life has evolved elsewhere and will be inclined to communicate, it may currently be in the equivalent of ancient Rome or the Middle Ages, or even millions of years behind us.

L = The length of time such civilizations release detectable signals into space.

UNKNOWN. Since we can know that we cannot know the place in the timeline of evolution a theoretical civilization is at, we cannot know whether they have already sent signals, or whether they are dozens or hundreds or thousands or millions of years from developing to that point. Also, again based on the one example that we do have with regard to sending detectable signals, we can assume that these signals may take centuries to be detectable once they are sent, and may in fact be in a format we are unable to detect when they do arrive.

In short, shy of a “Klaatu Barada Nicto” moment, there is no proof either way that aliens exist. Whatever you want to believe, fill in the probabilities above as you are inclined, and believe what you will.

Share this on ![]() witter

or

witter

or ![]() acebook.

acebook.

First Amendment and News

June 6, 2021

I could spend the rest of my life writing on the subject. With degrees in liberal arts and computer science and a penchant for political science, I see more and ask the questions, that, if I had 30 less IQ points, would never occur to me. All of this to say that in the end, like it or not, technology is affected by politics. So, how to address this reality? One can mourn over the connection or face it head on. In the end, the correct approach is somewhere in the middle.

Back in the day, I would leave work and often go to a local restaurant which had several factors in its favor. It served a slightly above average bowl of chili, it offered unlimited and very good black coffee, and it had a newspaper machine outside. I would take at least an hour (and tip for the privilege) sucking down coffee and chili, and reading the paper.

Having read my share of Supreme Court decisions in college, it would never have occurred to me that the concepts of being able to read the news and to do so without unnecessary government interference was NOT a First Amendment issue. Of course it would be! There is no point to having the right to report the news if there is not a corresponding right to consume the news.

Recently the FBI applied for a subpoena (quashed) to obtain the IP addresses of all people who read a certain USA Today news article. To a tech educated thinker this was evoked several responses.

- It was not surprising. The information exists, and someone (or more than one someone) will eventually seek to abuse it.

- This concept has existed for years, and has always been problematic. Imagine the person who went from paper newspapers to a first smartphone, and was required to sign in to read the news. 'Wait! You are now going to track, like track-track, what I read?' The younger generation has no basis for comparison unless they are very well read, and being well read these days is both an unpopular and politically questionable position with implications of racism thrown in for good measure.

- The government already tracks what you read in any case. This is not a tinfoil hat conspiracy theory, this is verifiable by anyone who cares to take the time to do so. I cleared all cookies, passwords and other PII from a browser and loaded a mainstream media site. I glanced over the front page and clicked on a tech article about tracking and privacy for added irony, and read that as well. Then I took the browser offline and examined the cookies the site set. Two of the cookies the site set in that short session were for non-US servers, which means that NSA already sucked up the information about who read what.

Given that this information is already recorded, and has been for some time, what is the point of the FBI subpoena? Perhaps not so much to acquire information that they already have or could have, as much as a warning to the public about what not to read. It's a far cry from being able to freely and anonymously consume news. As always, the resolution will finally be political, not technical.

Share this on ![]() witter

or

witter

or ![]() acebook.

acebook.

On Looting: To Address It or Not

June 4, 2021

The state of technology as it exists today suggests that this is wholly preventable with proper inventory control, and if this is so, would greatly reduce the value of looted items as personal use or resale items, which in turn would reduce the incentive to loot in the first place. A good example of this technology in action is Apple products. In the wake of a New York City looting episode, Apple announced that stolen phones could not be activated, and the question a serious technophile might ask is how Apple could make good on this advisory.

The answer is both technology and business control related, simple enough to implement, and properly applied, need not violate any (rightly) cherished American concept of privacy.

Let's consider two big ticket items of varying functions: a mobile and a smart television (this could apply also to personal computers, tablets or Bluetooth devices just as easily). These items (AS THEY ARE SOLD TODAY) have something in common, that is, they have a serial number programmed into them, and the serial number appears (or could) both in the internal programming of the device itself, and on the packaging of the unit.

This last is important: it suggests that the device serial number is readily available without breaching the consumer packaging. Think about the last high ticket item you purchased (or look at the packaging if you retained it). There will be a coded label which contains the model number, but also a unique serial number for that device. On the packaging. Outside of the box containing the actual product.

Any business which bothers to take the time and effort to do so can scan the bar- or QR- code of all devices which it takes into inventory and create a database containing the serial numbers of every device it currently holds. Upon sale of a given item, the serial number of that item can be removed from inventory. Not only is this not even remotely difficult, inventory control is the very reason that the outside of the box has a model/serial number label in the first place.

So, assuming a company takes 10 items into inventory, serial numbers 1 through 10, simply scanning each item for serial number should update the database with serial numbers accordingly. Companies already do this to record and track model inventory. Let's say that a business buys 25 model AT250 computers. The business needs to be able to track the inventory of model AT250 for its own business model purposes: that is, how well is AT250 selling and when or should we bring in more? Recording the serial number as the item is checked in to inventory, and, as importantly, removing that item and its serial number from inventory as it is sold is, or could be, a basic business model function.

So let's consider the implications as regards looting. In our connected society, the serial number of a device can be checked any number of times. A serial number can be checked

- in inventory control, that is, when the device is received as part of a merchant's inventory

- at checkout, that is, when a device is sold and ceases to be part of inventory

- at use, that is, when the device is registered on the internet or other networks

This last has many implications. Many people do not know this but every call on a mobile device, every ringtone, every app download can and usually does record the unique device identifier (that is, serial number) of the connected device. The same is already or can be true of every computer or smart television. Simply put, for the device to be fully functional, it must at some point, connect to a larger network.

Now suppose that company A has ten computers in inventory. It sells three, removing those devices from inventory every time a sale is made. That leaves seven highly and uniquely identifiable devices in inventory. Now assume a riot and attendant looting causes those remaining seven devices to be stolen. Company A has lost seven highly identifiable and unique devices from inventory. These devices are identifiable by model (since our Company A files an insurance claim based on known lost inventory, this is a given). Stolen items can just as easily be identified by serial number.

Neither Company A nor the police, nor the insurance can say where a looted device is in time and space, but they can know that, to be fully functional, the device must, at some point, register with some online system somewhere. A cable provider, an ISP, a mobile network, an app store. At SOME POINT, to be fully usable, a device must register with some network somewhere.

If a device must register somewhere, it can be prevented from doing so. If a company has the serial number of looted devices, it is a relatively minor technical exercise to prevent this registration. Perhaps the device can be made to verify itself with the manufacturer itself, perhaps the merchant or app market can perform the verification. Perhaps our Company A's insurance carrier insists on it or does it itself. Either way it's a minor technical exercise if the will to do it exists.

Now consider the implications if such a device is looted. Where can it be sold? Where can it be used? If the practical answer is 'nowhere' then the looted device is useless. It cannot be sold in the streets, it cannot be sold online. An individual user will not buy if the device is, or probably is, disabled, an online facilitating merchant like eBay or Amazon will not permit it to be sold if they have to get involved with satisfying a deceived customer. Social justice has its points, but in the end, to invoke Don Corleone, business is business.

There is the naivete question to consider. I am both American and technologically and politically educated. I appreciate the potential for abuse of such a far reaching system. I get it, perhaps more than most people. But, as Tik-Tok and Huawei can be prevailed upon to behave themselves despite their default tendency not to do so, so can any vendor or merchant. The solution is a political, not a technological one.

In the end, this is a question of will more than skill. The technology exists, and merchants (such as Apple, as we have seen) can implement it. It only remains for merchants (or their insurance carriers) to require it to be used properly and on a vendor and product neutral scale.

Share this on ![]() witter

or

witter

or ![]() acebook.

acebook.

Everybody Plays the Fool - Election 2020

August 28, 2020

I’m from Chicago. Political shenanigans and outright fraud does not surprise me, in fact it amuses me in a been-there-seen-that sense. Seeing Chicago style politics come into its own on the federal level doesn’t so much inspire outrage (although I’ll admit that it should). Rather, it inspires an amused chuckle and analysis for the next step resulting from the first, etc. On that basis, here’s my analysis which concludes with a Biden victory.

This election will be too close for a landslide victory on either side. The opposing philosophies are too drastically in opposition for there to be much crossover. This election as in all presidential elections, but perhaps more so in this case than in the past, will be decided by the swing voters. This is at the balloting level. Enter Chicago style politics.

In addition to an already close election, coronavirus will either cause people not to vote or to vote by mail. Voting by mail is rife with opportunities to cry foul. Absentee ballots by registered voters are considered valid, legal and trustworthy, but in this election absentee ballots are augmented in some states by generic mail in ballots which is not the same thing. And it’s a reason for either party to challenge election results in the courts.

It can be argued that the United States Postal Service practices may impact balloting results. This is neither here nor there, there are valid reasons why the postal service has reduced capacity. This includes adoption of online communications and commerce by one time postal service customers resulting in greatly reduced demand for first class mail, and competition for the remaining business by commercial shippers. Nonetheless, the postal service, while not technically a government agency has government ties. Any perceived inefficiency in handling of ballots is another reason to appeal election results in the courts.

Donald Trump has taken a wait and see attitude regarding the validity of election results. Hillary Clinton has suggested that Joe Biden not concede on election night should he apparently lose. Both sides in fact appear to be gearing up to challenge the election results. Being a federal election, either side losing at a lower federal appellate level will appeal to the next higher level federal court until, finally, they arrive at the Supreme Court. Barring a landslide, which won’t happen, both sides will determine rightly that they have everything to gain and nothing to lose by appealing to the very end.

So, the Supreme Court in 2020. The Court has a slight but real liberal bias at this time. Justices typically tend not to retire just prior to a presidential election, being aware that the current administration nominates a successor. If a conservative justice were to retire prior to the election the best a conservative administration could hope for is to maintain the status quo. If a liberal justice were to retire, they must be aware that they risk changing the balance of the Court at a politically delicate moment. Therefore it is safe to assume that there will not be a change in the makeup of the Court prior to the election.

My conclusion is that in a hotly contested election with not one but several potential legitimate causes for appeal to the courts, and with the final court of appeal being a slightly liberal leaning Supreme Court, this slightly liberal Supreme Court will ultimately decide the election. Conservatives have been shocked more than once to see Justice Roberts side with the liberal side in Court decisions. Given the vagaries of the arguments which will ultimately be presented to the Court, there are abundant opportunities to decide an election outcome according to political preference, thus the election outcome will ultimately be political, philosophical and most importantly, decided by a liberal leaning Supreme Court.

I am not political in the sense that I do not vote. I enjoy political science far too much to stake out a personal position in elections. For me it’s about the entertainment value; I have a philosophy degree, what can I tell you? As such I am not endorsing or supporting either candidate. This is not a political position; it is the joy of the journey there in my case. With that exception duly observed, and based on the above analysis, I am calling the election for Joe Biden as certified in the United States Supreme Court.

Share this on ![]() witter

or

witter

or ![]() acebook.

acebook.

Attribution Dice (The Mobile Twist)

May 18, 2020

The one detail I will mention concerns an analyst whom, when asked about attribution for the cyber attack under investigation, produced a set of dice with various possible parties, methods and purposes printed thereon, and suggested that if the interviewer rolled the dice, the result would be as good a guess as the analyst or anyone else could probably provide.

Considering this argument, it makes a lot of sense. Suppose a French speaking hacker uses American Vault 7 code for his malware and for the purpose of obfuscation throws in some German comments or variable names, said code then analyzed by a Russian. In reality, this code could variously be attributed to or interpreted by a Frenchman, an African, a Canadian, a German, an American or a Russian, to say nothing of any of their respective governments. Or all of the clues could be red herrings.

I found this so amusing -and completely believable- that I decided I needed a set of attribution dice for my very own. Sadly, attribution dice are hard to find as physical items. They appear to be made by hand in small quantities, very much a niche item, and I was unable to find a set. Which left me the option of making a set of my own.

Given my personal areas of skill (and lack of same) if was far easier to take a picture and photoshop it, and create digital attribution dice than it would have been to create the physical item. Having made a decent Android app, it was a small step to make it available on Google Play.

Share this on ![]() witter

or

witter

or ![]() acebook.

acebook.

An Open Letter to US Bank (in the time of the Plague)

April 8, 2020

As you bask in abundant cell signal in Minneapolis, take a moment to consider rural America. There are vast swathes of the United States, now, in the year 2020, which have little or no cell signal. In a motivation near and dear to a banker's heart, it's not cost effective to put cell towers up in rural areas. The claims by many mobile carriers of high percentages of coverage are a marketing gimmick; in fact they cover this percentage of the population in high population density areas. And yet seniors and the disabled, living on fixed incomes, do not always live in these high density population areas, especially considering the increased cost of doing so.

I set up one of these seniors living in rural America with a rather powerful Android tablet, which she has learned to use. It has any and all of the features, functionality and power of an Android phone, except mobile signal. This is appropriate because there is no mobile signal available at her rural American home. It does have 5 GHz wifi, Google Play, a high end camera, in fact everything necessary to download and run your mobile banking app for Android, including remote deposit of checks. In order to enhance the device further, Google Voice was added for texting capability.

This senior had to risk coronavirus exposure for herself and her household which includes another senior and a disabled daughter in order to deposit a paper check even though the technology was available for her to avoid this risk. The only reason this dangerous excursion was necessary was that US Bank did not permit her to sign into the US Bank app because

- US Bank did not like the Google Voice number attached to the device

- US Bank would not even make an attempt to text the number offered

- US Bank did not offer an alternative method of verification such as email, postal mail or website login

To be clear, US Bank does not ever need to text anyone. US Bank may want to text customers, good marketing it may be, but good service and responsible corporate citizenship, especially during a pandemic, it is not.

Thank you for listening.

Share this on ![]() witter

or

witter

or ![]() acebook.

acebook.

Masque of the Green Death, or, Those Old Coronavirus Blues

March 21, 2020

And Darkness and Decay and the Red Death held illimitable dominion over all. -- Edgar Allan Poe

The penthouse of Prince Prospectus was like no other. Well secured against the masses by private security, well stocked for a prolonged stay, and well populated by minions, entertaining, enterprising, and dependent to a man on the goodwill of Prince Prospectus, did they all retreat to wait while the Green Death burned itself out. And if the wait would be prolonged due in no small part to the initial reluctance of Prince Prospectus to shut down PRODUCTION, what of it? The wine was good, the food was excellent, and the Internet and merriment went on in the domain of Prince Prospectus.

A moment should be set aside to describe the penthouse of Prince Prospectus, for indeed it was a rare and unusual abode. The public rooms were seven in number, and each was decorated in a color after Prince Prospectus’ inclinations. Many were a shade of green, from the subtle gray green of a banknote, to the lusty green of a T-Bill coupon, to the bright neon green of a stock ticker. The last was gold, which is, in the end, the final fallback of worth after all fiat money has failed, or as some wags would have it, orange as a president’s hair, but never was such wit proclaimed in earshot of Prince Prospectus himself.

Now Prince Prospectus prepared a party as the peons raided the stores for toilet paper. To be sure Stephen King never wrote about a run on that particular item, but what of it? If anyone in Prince Prospectus’ circle was so crude as to have read Stephen King, it may be certain that they kept that fact very much to themselves. And the party!

How to describe such a party as Prince Prospectus threw! Everywhere was cliche. From somewhere, a minion had procured a gi, and he was Kung Flu! There were surgeons general and wandering attorneys assuring all and loudly, that there was no liability leg to stand upon!

But whenever the stock ticker in the neon green room chimed to indicate that another hundred points had been lost in the markets, the revelers paused, their hands reflexively crept to their belts, where their mobiles reported the damage. And, after calculating the damage to their portfolios, and that of Prince Prospectus, their mobiles were put away, and the revelers glanced at one another, some altogether a delicate shade of green Prince Prospectus has not provided, and they promised themselves that the next time the stock ticker chimed its doleful message that there would be no such pause, and yet, at the next chime, hands would creep toward mobiles once again.

Now there walked through the fete of Prince Prospectus a guest whose countenance was completely obscured. Dressed in surgical green, another color that Prince Prospectus had never considered for his decor, this apparition was, and it cast quite a pall upon the revelers. When Prince Prospectus saw this reveler he was seen to pale momentarily, but Prince Prospectus was a doughty man of business, and such surety surely defined him in his own domain as well.

Well did Prince Prospectus know that forward looking statements were no guarantee of future performance! Yet the apparition in the unusual green garb was, well, disturbing. Tacky, declasse, positively inappropriate to the occasion. Thus did Prince Prospectus order the apparition seized that he may be unmasked, and given a stern talking to regarding class, taste, refinement, and the subtle but real fatality of taking a joke too far!

But the apparition was not seized. All backed away in fear of the surgical habiliments, the rubber gloves, the masked face. Prince Prospectus, seeing both the reluctance of his minions, and the source of their discomfiture, decided on the spot that the so-tasteless minion bore more than a talking to. Prince Prospectus would, by gosh, FIRE the man. And with no reference! Such discordance as the low humor displayed was enough to put one off one’s game!

Yet, at first, there were none in all of Prince Prospectus’ retinue who would seize the ill-conceived jokester. Loyalty, Prince Prospectus realized suddenly in a moment of nearly paralyzing revelation, went so far and no further, and the more intelligent the minion, the more true this would be! Still, loyalty existed, in the lowly of the low, and Prince Prospectus’ chauffeur now leapt forward, loyal to Prince Prospectus in precisely the way that Prince Prospectus was not!

As the chauffeur seized the surgical garbed figure, he struggled, not with a man, but with the collapsing clothing which held no mortal form at all! And as the clothing fell into a disorganized pile upon the floor, the chauffeur began coughing. Prince Prospectus began coughing. The retinue began coughing. Could it be...it COULD be, that a natural microorganism would be so disrespectful, so UNCOUTH, yes! as to have no respect for class boundaries! It could happen; it was happening now!

And Darkness and Decay and the Green Death held illimitable dominion over all.

Share this on ![]() witter

or

witter

or ![]() acebook.

acebook.

Quoth the Maven, Nevermore

July 4, 2017

This injury comes as a result of a poorly designed mobile phone; poorly designed in the sense that more than one function which should not be activating by itself does so. The results escalate from nuisance harassment of emergency services to actual user injury.

Share this on ![]() witter

or

witter

or ![]() acebook.

acebook.

June 27, 2017

The forecast for big data is cloudy with a chance of GIGO.

Share this on ![]() witter

or

witter

or ![]() acebook.

acebook.

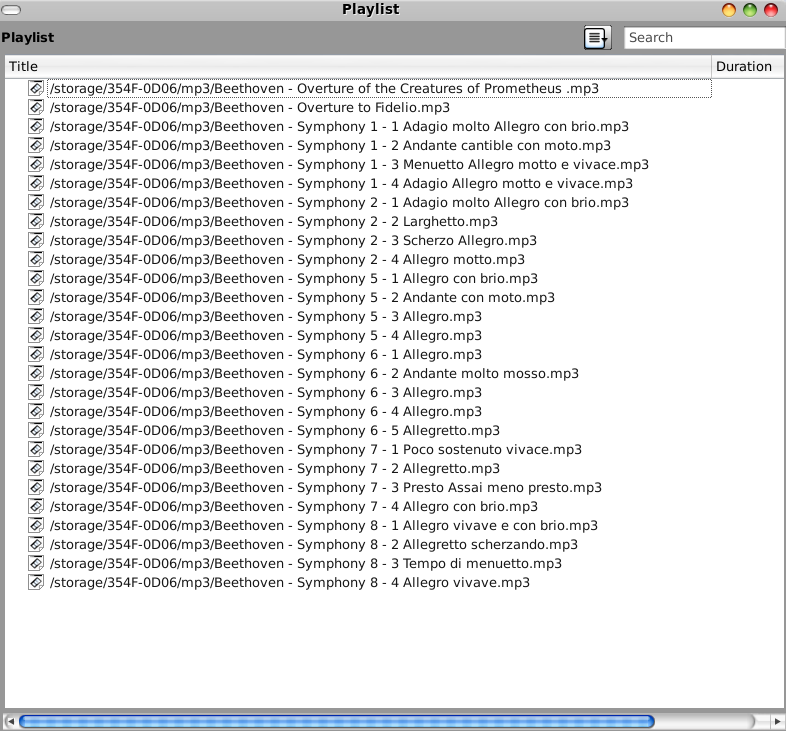

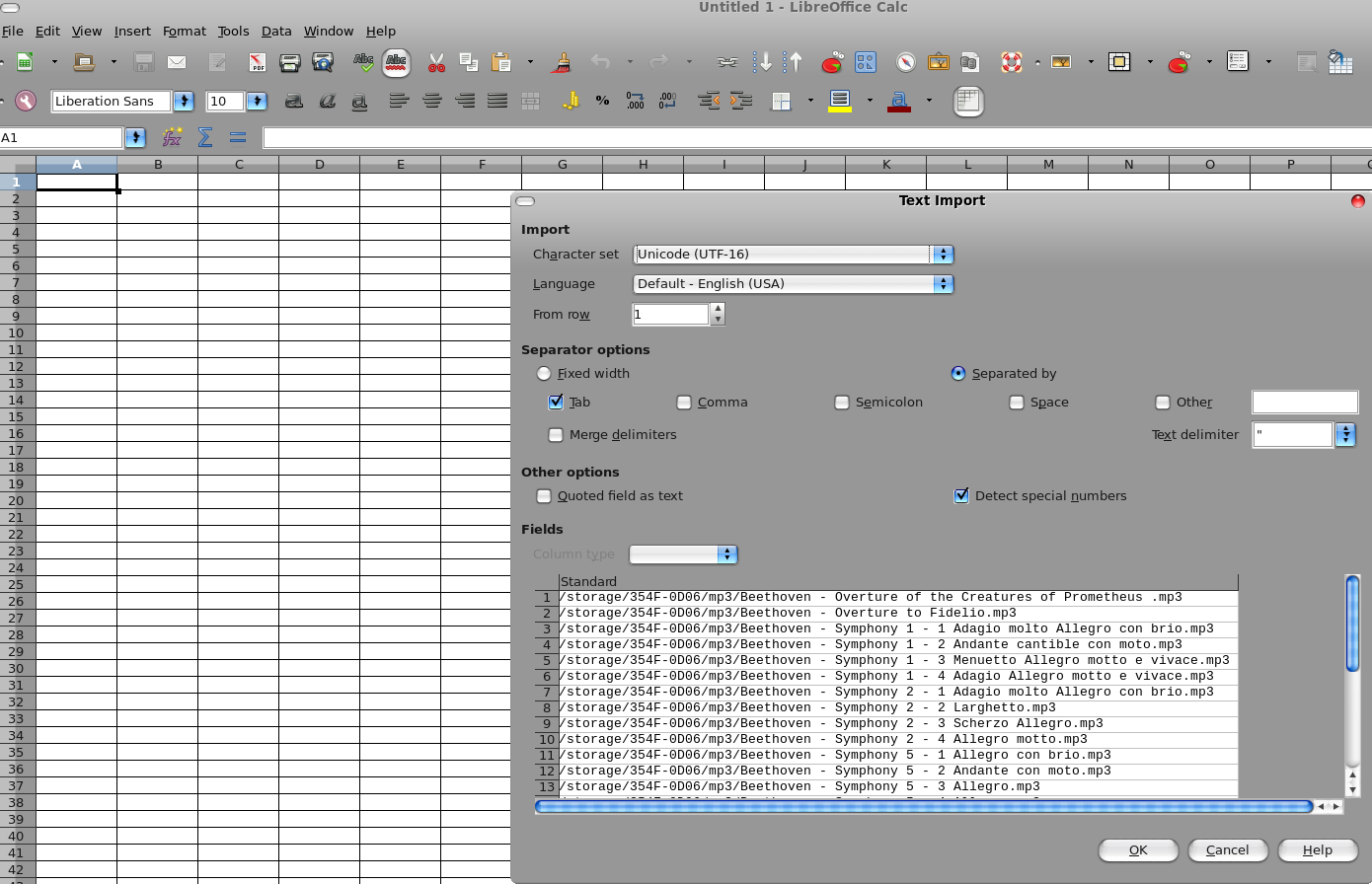

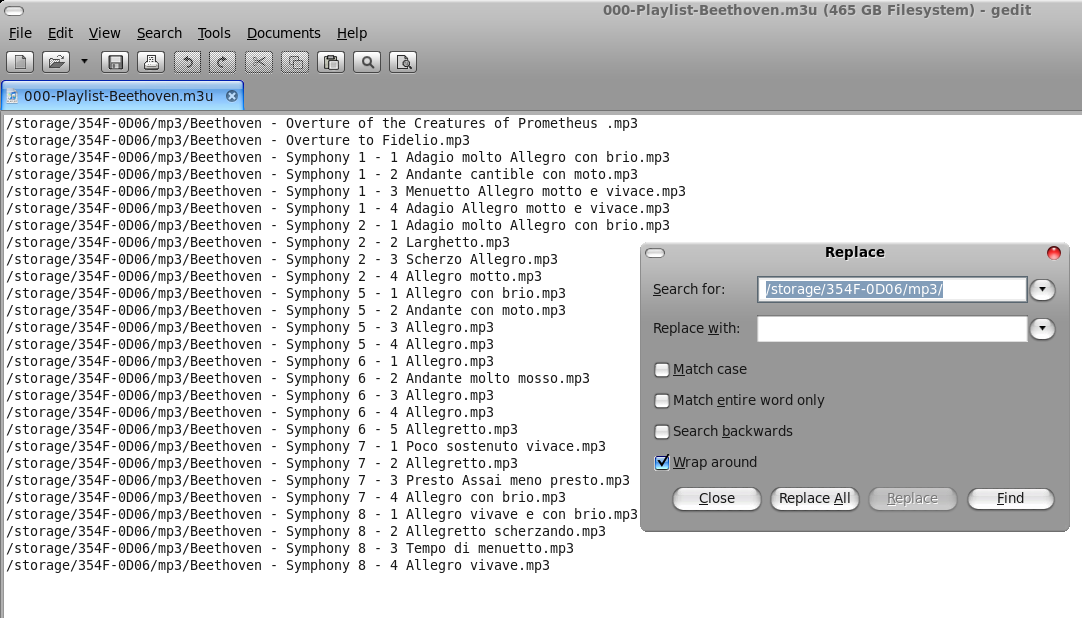

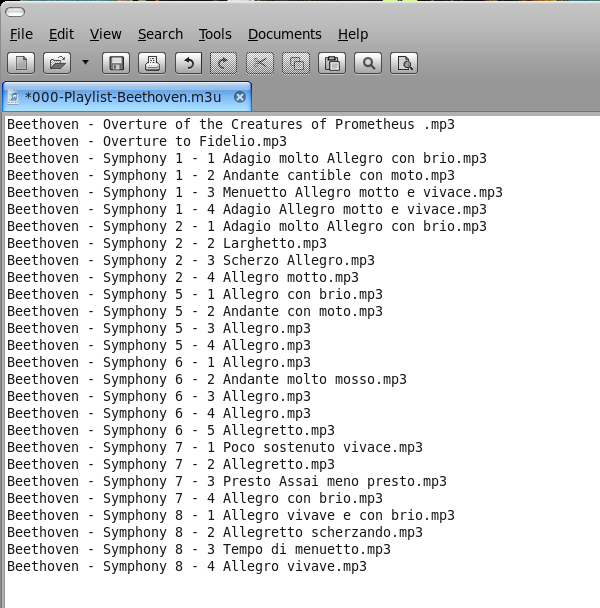

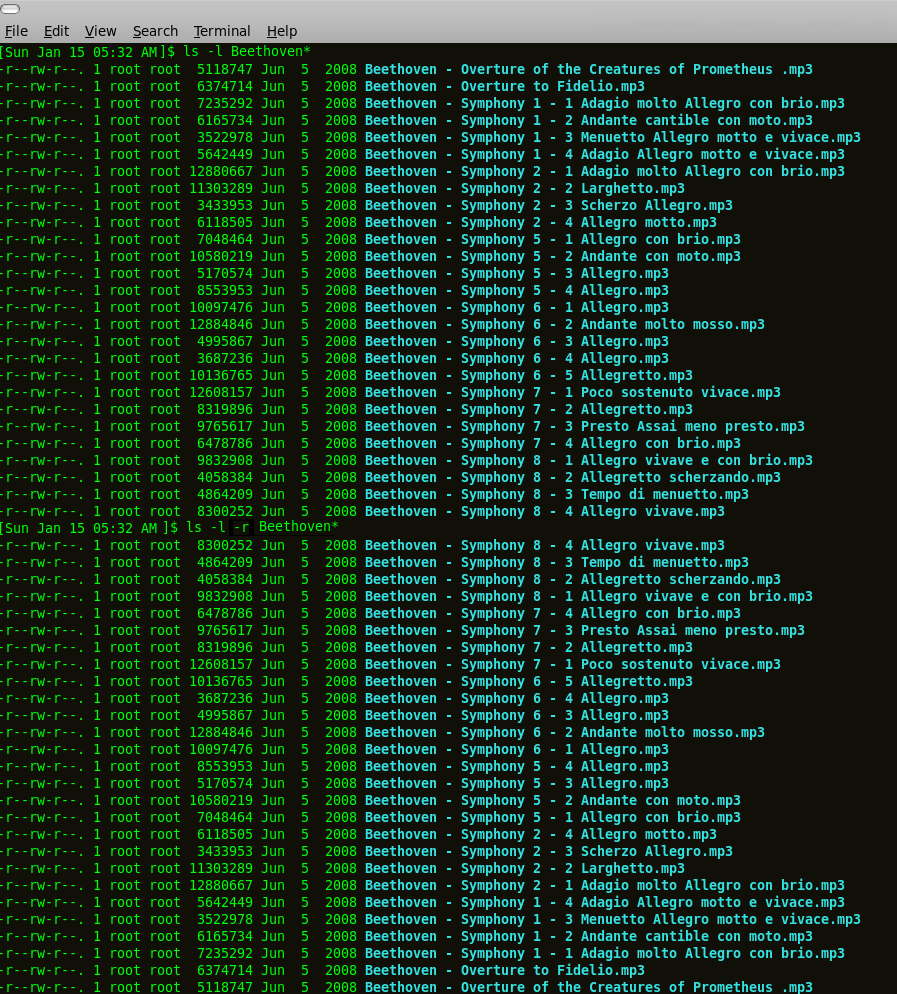

Marshmallow's Terrible, Horrible, No Good, Very Bad MP3 Player

January 14, 2017

Share this on ![]() witter or

witter or ![]() acebook.

acebook.

The (other) CSI Effect

January 4, 2016

Well, we can discuss it, but it's never going to be as fast as the computers on CSI, and it's going to cost you.

Share this on ![]() witter

or

witter

or ![]() acebook.

acebook.

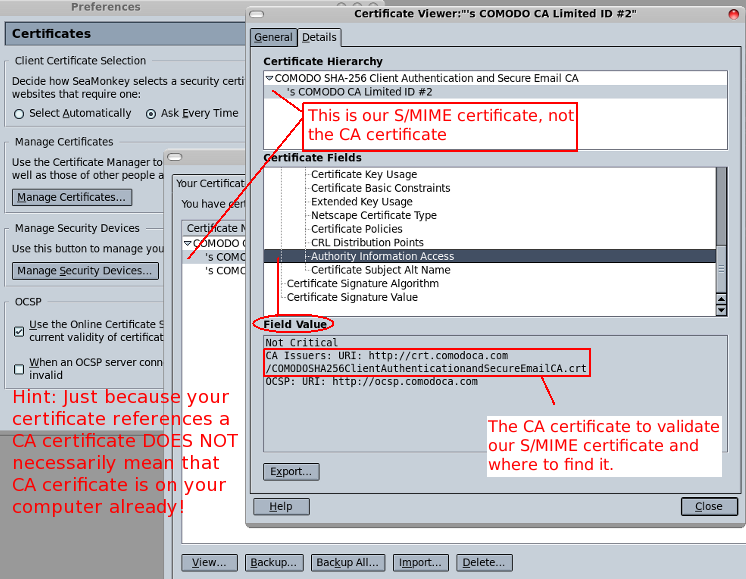

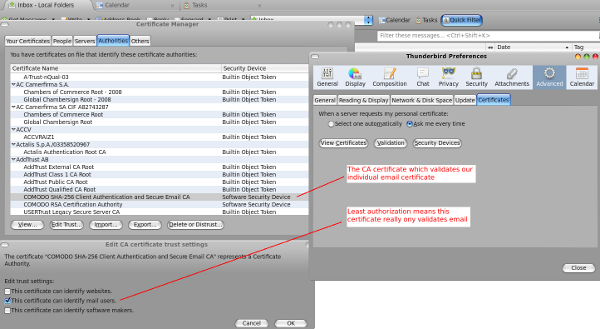

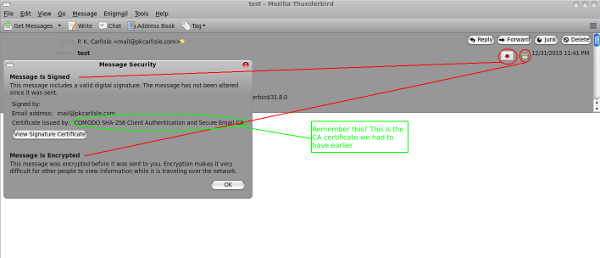

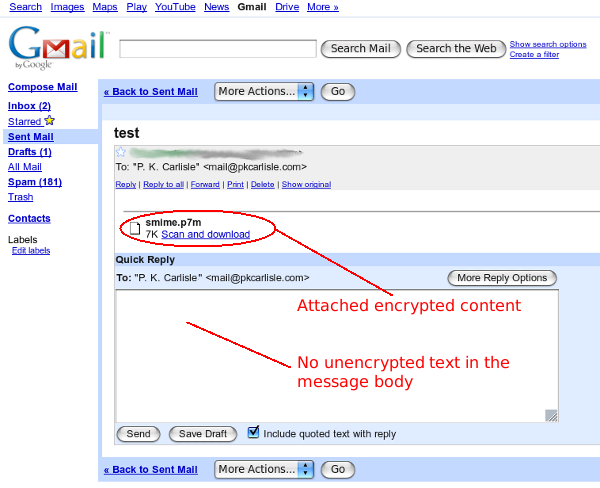

HOWTO: S/MIME Setup in Thunderbird

January 1, 2016

- Comodo (the CA) generates certificates for digital signatures and encryption of email

- CA sends a link via email in order for the end user to retrieve their certificates

- Browser gets the certificates from CA

- Browser installs the certificates to itself

- User asks browser to export certificates to user's computer

- User imports certificates into Thunderbird

That's it, you're done. You should now be able to digitally sign and encrypt email with S/MIME in Thunderbird.

Share this on ![]() witter

or

witter

or ![]() acebook.

acebook.

December 31, 2015

Best of luck with your exams.

Share this on ![]() witter

or

witter

or ![]() acebook.

acebook.

Bedtime story for a puppy

September 27, 2015

(As told to my friend's new puppy)

Once upon a time...

There was a restaurant owner named Mister Vinh. Mister Vinh ran a Vietnamese restaurant, and his delighted customers would come from miles around to exclaim and marvel at the meat he served. “Mister Vinh, I don't know how you do it! I have never had meat like that before!” they would exclaim. Mister Vinh would nod and beam.

Now some say that Mister Vinh had his own idea of 'puppy chow'. Others say that's nonsense. Mister Vinh's is a puppy paradise, they say. Why, when puppies go to dinner with Mister Vinh, they like it there so much that they don't even come home. Ever. Again.

So be a good puppy and stop pooping in the hallway, because if Mister Vinh hears that you're a BAD puppy, he just may send a dinner invitation for YOU!

The End

Share this on ![]() witter

or

witter

or ![]() acebook.

acebook.

Where ideas come from (and where they go)

September 26, 2015

Very often in life we act and think as we do because we are following conditioned behaviors. Uninterrupted, we will continue to follow this conditioning from cradle to grave without ever stopping to consider whether there is another, better way. Often it takes a catastrophe to jolt us out of our way of thinking and make us consider such ideas as free will, and responsibility to ourselves, and to the planet, and to future generations. But you can change your life without having disaster strike, and maybe in the bargain avoid disaster in the first place.

Mickey Spillane wrote his first Mike Hammer novel in 1947. Times, values and beliefs were different. The 1940's Hammer drank whiskey from the bottle, smoked unfiltered Luckies and food was whatever he could toss into a frying pan. About one in four Americans of that and later generations would still die from heart disease or other diet or lifestyle related problems, but the data hadn't been convincingly collated at that time, and people in general had not yet made the necessary mental connections.

Fast forward to 1989, and Mike Hammer was a reformed smoker and lite beer drinker who minded his diet and worked out in the health club. This change probably reflected Spillane's own health awakening, but it also illustrates the importance of a little perception and perspective.

What is perceived as normal is normal today. It may not have been normal yesterday and will not necessarily be normal tomorrow. A hundred years ago the pseudoscience of phrenology said that you could determine personality and abilities by the shape of someone's head. A hundred years from now people of today may be perceived as being frighteningly primitive to accept either twenty-five percent mortality from heart disease or a lifestyle in which bad habits are offset and cobbled together by every type of prescription imaginable.

It is possible for one person to change their own behavior within their own lifetime. To some degree, social norms will help, for example the negative attention tobacco use receives today as opposed to forty years ago. But you cannot and should not rely on changing social norms to define your reality for you.

Entirely too often social norms are dictated by corporate or political entities which may not have your best interest at heart. Who would have thought that the expanded awareness that many of the wholly avoidable medical ills which befall Americans would be answered by a slew of pills as a lifestyle augmentation? Would it not have been expected that such an awareness would have led to changing habits instead? Unfortunately, that did not happen, but there's a lesson to be learned nonetheless, and that lesson is that only you must be the final arbiter of which social norms you believe and follow.

The philosopher David Hume argued that there may be no such thing as free will. It's a tricky proposition because, if Hume is to be believed, everything that we know and believe is a combination or extension of what we already are or know or have experienced. Even the pursuit of knowledge itself is not exempt from this reasoning. So that, if one is conditioned to believe what they are passively taught, to go along to get along, that social and political systems will always work for the greater good, then it follows that these people will be unlikely to question the status quo or to accept responsibility for their life as they know it (unless their conditioning tells them that they are responsible, of course, in which case they go straight down Alice's rabbit hole trying to make sense of the logical inconsistency).

If current social norms trend toward conformity, this leads to a flawed model in which people go to one or another extreme, of either following social norms and denying that own actions and lifestyle in any way are responsible for any resulting discomfort, or accepting that something is wrong somewhere, but desperately seeking something, anything, to blame as long as it absolves them from any need to personally take dramatic action to improve themselves. Of course, neither extreme being a particularly good choice, many people spend an inordinate amount of time trying to make sense of what society tells them steadfastly must be true, even while common sense whispers that there's something wrong with the reasoning. The worst thing of all about where this reasoning leads is that you cannot reasonably expect to change this behavior for all or even most people who accept such conditioning.

The good news is that you can change your behavior for yourself and at least hope to impact what constitutes knowledge for those you care about. The very fact that you are reading this is information. Even if David Hume was correct and we are and can only be a product of existing knowledge and experience, now that you are aware of it, you have a responsibility to yourself to expose yourself to as much knowledge as you can, in order to become the most well rounded person that you are capable of being.

Of course, it really goes without saying that you are also volunteering to be an outsider to the degree that you think for yourself, and when that thinking leads to choices outside of the mainstream. That in itself is not a bad way to live, but it is worth mentioning if for no other reason than when you first experience any such social ostracization, you really have two choices to address it: to reassess your actions and decide that they are correct after all, or to scurry back to a safe level of social acceptability and like Shel Silverstein's Giving Tree, be happy (but not really).

Share this on ![]() witter

or

witter

or ![]() acebook.

acebook.

It was a pleasure to tweet

August 30, 2015

It was a pleasure to tweet. And to text and to Facebook, too.

Read a book and you spend hours of your life, and it may not even end the way you would have it end. Hours you cannot get back and no guarantee of the result. But tweet or text! Why if it doesn't work out, you've spent 140 characters of time! Not satisfied? Send another. And again, and again. Sooner or later, your fingers move of their own accord. Autonomic tweets, automatic texts.

Nobody will read them, hell, nobody can possibly read them all. It doesn't matter, in fact, it's better that way. Cognitive dissonance. Believe that your tweets matter, know that they won't be read. Good feelings without responsibility. Why, responsibility itself is fourteen letters. It uses up a tenth of your tweet, just like that! Six syllables, ridiculous. Think of all you can fit into six syllables and fourteen letters if you don't waste 'em! Now! Wow! Click! Pic! Blink! Link!

Cursive writing isn't taught in school any more. Cursive comes from the same root as discursive, discursive means a flow of ideas, cursive is a flow of writing. Cursive only matters when there is a flow of words to communicate; who knows where that may lead! Learn the physical skill and you may use it. Develop ideas. Independently, how boring. To say nothing of unsocial. And how will you ever tweet them? You won't! You would have to write a book, and there you are tying up hours, stealing hours of someone's life, and you cannot even guarantee satisfaction.

Censorship isn't real. You learned that in school. “Censorship', 'government', 'bad'. There was a test. Say the words. Pass the test. You're educated. If it is not done by a government, it cannot be censorship. Does not 'censorship' mean 'government' plus 'bad'? When the news is censored by the news sellers, why it can be anything at all! Restraint. Protection. Good taste! As long as it fits into 140 characters. Blast! Passed! Past!

Here's a secret. Back when people read and thought, they didn't necessarily learn. Uncle Tom's Cabin: People do what they will and organized religion, depending on those same people for funding justifies from the pulpit what people wanted to do anyway. A hundred and fifty years ago, and still today my God can beat up your God. The only thing worse than no knowledge is nobody learning. The only thing worse than nobody learning is when you learn alone and shout into the wind and nobody hears you. Don't learn. It's painful and frustrating. Tweet! It's neat! It can't be beat!

People with computers want to sell you things. Other people with computers want to keep you safe. Doesn't work if we're not all the same. Make everyone the same. Reduce ideas to cliches, communication to a handful of letters. If nobody knows where ideas lead, they are blank slates waiting to be told. Don't tell them too much. They're not equipped and not interested. Easy! Breezy! Pleased!

Share this on ![]() witter

or

witter

or ![]() acebook.

acebook.

Mark Twain and Google

July 28, 2015

Re-reading Huckleberry Finn, and never having actually precisely clarified the matter before, I decided to look up Jackson's Island to see exactly where it is on a map.

In the old days this would have to wait until I had a map or atlas available of sufficient scale as to show detail such as a small island; these days one can look at Google Maps and get a map on the same device as the book (hence my curiosity to precisely locate the landmark)...

...A map covered in a red banner with Google complaining about my tablet configuration and inviting me to start jumping through hoops (downloads, privacy policies, and the like) to see a map until it was quicker and less painful simply to give up.

Mark Twain would be perhaps unsurprised to note that human bureaucracy and confusion is keeping pace with technological progress quite nicely. Thank you, Google, for that confirmation of human nature.

Share this on ![]() witter

or

witter

or ![]() acebook.

acebook.

Bitcoin: Observations and Thoughts

June 6, 2015

I recently had the opportunity to observe and consider some of the elements involved in the acquisition and use of Bitcoin (BTC). Other than the relatively minor positive element that using Bitcoin makes one appear to be a technical wizard with a depth of obscure knowledge, there did not appear to be much if any serious benefit to Bitcoin. From the perspective of people experienced with using, moving, and accounting for money in the real world, it's nothing new, and in some ways a lot worse. Quite possibly, that's even the point: If you are working with someone with their head in the technological cloud, they are probably less experienced with the more subtle points of money and accounting, making them comparatively more attractive targets. What follows are some of the actual elements observed.

Upwards of 40% in fees. Euphemize it, slant it, blame one party or another for fees, the reality is this: Converting US dollars to BTC and then spending them came with an average of more than 40% in fees. The euphemism chosen is irrelevant. If a credit card charged 42% to use it for purchases in another currency, how long would you continue to use it? What is paid to use a thing, no matter how the cost is categorized, is the rational basis for measurement.

Some paranoia required. It was necessary to play a drawn out cloak and dagger game, including use of once-off ciphers, and sending selfies holding identification documents to complete a BTC purchase. For a currency which is touted to be fringe and counter culture, it was a Byzantine identification process, ironically requiring identification issued by the very governments Bitcoin users by and large claim to distrust. Also, some less savory people hailing from a variety of (principally) eastern European countries fairly regularly recruit in, or come to the US and western Europe to engage in, a variety of credit card and banking fraud, siphon off a little or a lot of money, and scurry back home. Sending identification copies to the wrong person may well give whomever purchases it somewhere down the line what John Le Carre called a legend, a convenient identification to adopt on these forays.

Even once you have Bitcoin it's not anonymous. Virtually everyone who moves Bitcoin takes a slice of every transaction as a fee. Most often this process is automated, and must be in order to be practically applied. Consequently, often the Bitcoin address receiving the fee is well known (and would be, being hard coded into whatever software wallet's algorithm slices the fee off of each transaction). If you know a) the customary address or addresses receiving transfer fees, b) the amount of the fee, c) the customary fee percentage, and d) the time stamp of the fee, then it becomes a straightforward task to e) calculate the original transaction amount, f) inspect the block chain for that transaction, and g) track the transaction from start to finish. Not only can accountants do this easily, they actually like doing that sort of thing, it's their meat and drink.

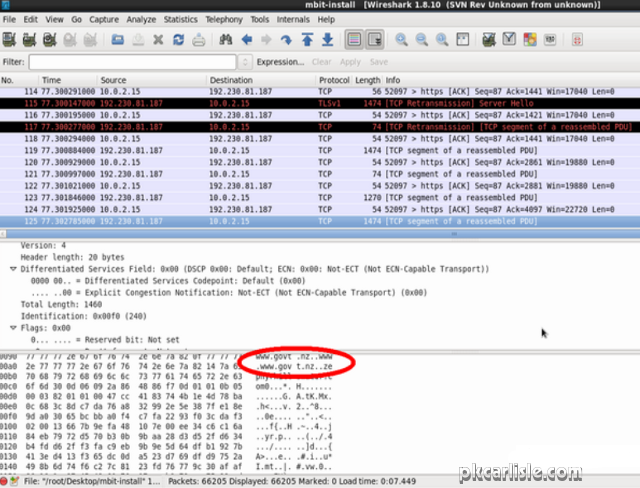

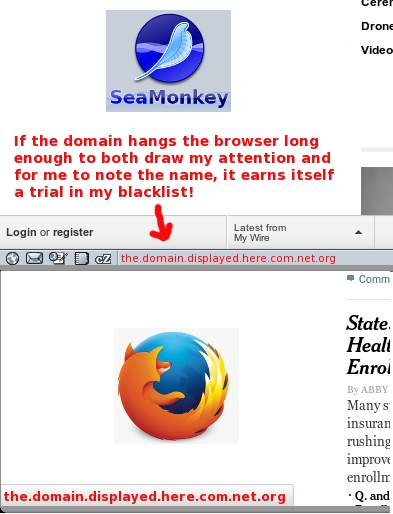

[Lest there should be any lingering question about anonymity and Bitcoin, MultiBit, a popular Bitcoin wallet, appears to say a big "hello!" to a server shared with the New Zealand government when MultiBit is installed. New Zealand, being one of the so-called Five Eyes, even installing a Bitcoin client would seem to be grounds for surveillance, and Bitcoin users might consider themselves officially on a list. This is a screen capture from a Wireshark session run while installing MultiBit.]

Not everyone cares about the anonymity of Bitcoin. To some it just seems a cool, techie thing to do. It's a fair descriptor, but at a 42% markup, Bitcoin had better be extraordinarily cool and techie. Also, with the identification process, it's fundamentally no different than using Paypal (and a lot more drawn out, dangerous, and expensive).

Bitcoin faucets today are largely confidence games. The Bitcoin faucet was originally conceived to get people to consider Bitcoin, and painlessly try it out without investing their own money. That concept has evolved into myriad sites which claim to pay fractions of a bitcoin for playing games, completing surveys, watching promotional videos, etc. There are several issues with the modern Bitcoin faucet which, cumulatively, may permit them to be safely classified largely as scams.

A bitcoin may be divided into thousands or millions of units. A faucet will pay a couple millionths of a bitcoin for completing a survey, watching a video or participating in whatever service it purveys. However, when you convert millionths of a bitcoin into, for example, US dollars, that comes to fractions of a penny for each video watched, survey completed, etc. That's a fair deal if you go into it with your eyes open. However, many faucets also have a minimum withdrawal limit on balances, meaning that for practical purposes, a user may have to spend an inconvenient number of weeks or months watching videos and completing surveys to acquire the minimum balance for withdrawal, which at that time may convert to a dollar or so.

If that isn't enough to discourage most users from sticking around long enough to actually collect, most faucets viewed pay bitcoin into their own proprietary wallets. Bitcoin is designed to not require this, which in turn indicates that this is intentionally added convolution which avoids what should be a fairly straightforward payment process. In short, bitcoin faucets appear to require considerable user interaction, while at the same time making an extraordinary effort to avoid paying amounts calculable in pennies. [In fairness, there was one site viewed which actually did, quickly and without qualm, send the .000457 BTC ($0.11 US) promised for the hour of surveys completed, videos watched, etc.]

In summary, at best Bitcoin is average or slightly below average in performance. Fees can be reasonably classified as excessive in many cases, meaning that there are significantly less expensive payment methods. Bitcoin does offer a cachet of technical savvy, perhaps offset by a perception of naivete about the real world. Anonymity is a myth to anyone who understands accounting. Scams and, to some degree justifiable, paranoia attach to it. Bitcoin would not seem to be a serious competitive currency.

Share this on ![]() witter

or

witter

or ![]() acebook.

acebook.

Coming Soon: Goatcam!

May 12, 2015

I never imagined that I'd see myself writing this, but yes, Goatcam! is coming soon. I have a friend who started with a goat and added a variety of other farm-type animals to the menagerie, and I thought it would be an interesting technological challenge to set up Goatcam! to monitor it all live. The technical constraints made it interesting for me: it had to be free or nearly so, since Goatcam! is a fun project and not a serious commercial endeavor. That meant that it had to use existing or repurposed equipment and free or existing web services exclusively.

Goatcam! uses an old (now repurposed) Android device running over wifi. The Android device can use an app called IP Webcam (or IP Webcam Pro), which has various useful qualities including a comprehensive configuration interface, persistence on device reboots, local network wifi presence, and the ability to generate a still picture. The picture is processed on the back end in Linux, then sent to a Picasa album which is embedded in Goatcam! as a slideshow. This meets the needs of functionality (low bandwidth for a residential internet connection uploading content to a free service) and price point (in this case, nothing). A surprisingly consistent tendency among the non-technical is the belief that this sort of thing is, as a rule, free (it is, as a rule, not free). Being based on free products and services, I cannot guarantee permanency, but it will/does work now.

The technical side is essentially completed and is going through some final testing and adjustments. There are a few logistical details to be resolved both on the technical side, and on the, well, goat side. After that Goatcam! will be on the web. Watch this space for a link when it goes live!

Update: Goatcam! has had a minor setback in the timetable to going live, and is especially in need of a donated older Android device. If you have an older Android device sitting around and are willing to donate it to the cause, please contact me with the Contact link below. The Android device needed does not have very high requirements at all, but it does need the following minimum requirements to be useful:

- Phone or tablet, either is usable provided it can boot in wifi only mode

- Recent enough hardware/Android version to support video recording

- Video camera / video recording capability

- Bare minimum SD card (2 or 4GB), just enough to make any video configuration run smoothly

- No other advanced features required (the donated Android device will be dedicated to Goatcam!)

- Technically stable (no tendency to reboot, power off or lose charge spontaneously)

- Power cord required (the Android device will not be used unplugged)

If you have such a device laying about, don't know what to do with it, and are willing to donate it to the cause, please let me know. Goatcam! will thank you!

Share this on ![]() witter

or

witter

or ![]() acebook.

acebook.

Windows: Then and Now

April 17, 2015

Short post today. I was on Windows 7 earlier and it brought to mind a little joke from the Windows 95/98 days.

It seems there was a man flying a plane, he's almost out of fuel and has to land right away, but he can't find the airport. He sees a large office building, so he writes a note which reads “WHERE AM I?”, sticks it against the window and flies low past the office building. He loops around and flies past again and the office workers have written “YOU ARE IN A PLANE AT LOW ALTITUDE FLYING PAST OUR BUILDING.” Then the man knew he was at Microsoft and could find the airport from there.

The more things change...

Share this on ![]() witter

or

witter

or ![]() acebook.

acebook.

Fun in the Sun: A Solar Powered Laptop

April 01, 2015

It's that time of year when the snow melts, Spring has sprung and people take themselves back outside after the long hibernation. In that spirit, here's an easy to build solar powered laptop charger designed to keep you computing when you're out and about.

Enjoy!

Share this on ![]() witter

or

witter

or ![]() acebook.

acebook.

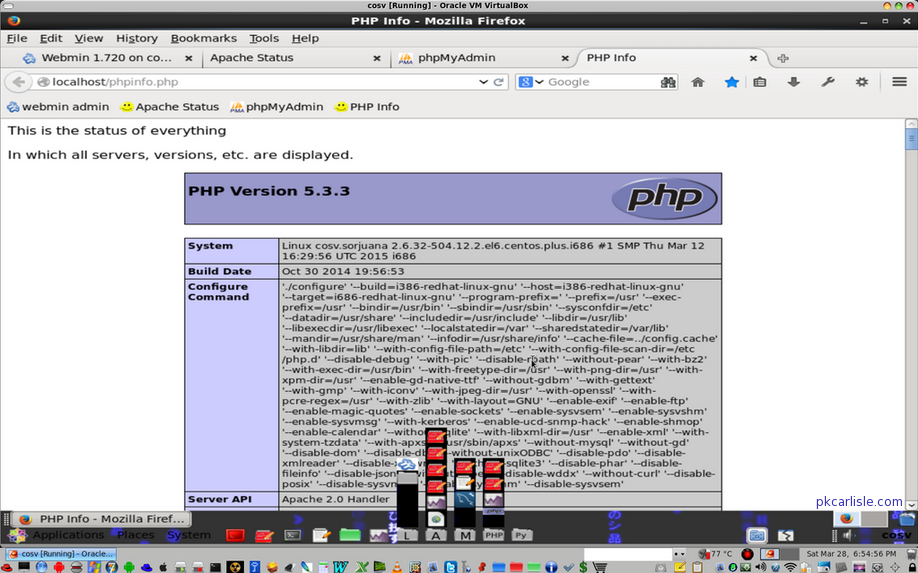

LAMP, the Linux and Everything

March 26, 2015

LAMP (Linux, Apache, MySQL, PHP) operates as a bundle. There are exceptions to be sure, Linux can be replaced by Windows or Mac. But if you want what would be considered a 'clean' install for development, LAMP with a Linux is the way it's spelled. I recently set up a LAMP stack, and this post is a response to that experience.

In fact, this post is a little bit of a rantlet, a small rant. Several problems were wholly avoidable and lay squarely at the feet of various Linux distros. My problem was that I had faith for too long and kept trying to make work what can only be described as a kludge. When I gave up on that approach, I had LAMP up and running in no time.

- All attempts were done in virtual machines (VM). This is not a bad idea if you do not have requirements which prevent it, and in fact should cause no problems as a rule. The host OS in all cases was CentOS 6 64-bit. The VM environment was VirtualBox.

- First I tried Fedora 20 (“Captain Comic Book”). I nicknamed it that since it seems to have veered toward something which is glossy, light, locks users out of things the OS feels users should not be accessing, and is generally inconvenient to use and no longer a serious Linux distro. Add to this limitation a peculiarity of certain Linux purists: that if the packagers feel that the 'true open source purity' of a piece of software is somehow compromised by a logo or license or corporate entanglement, that software may not be included in their Linux distribution in its original form.

This was the case with MySQL in Captain Comic Book. MySQL is apparently insufficiently pure to be included in the distro, and has been replaced (poorly) by something called maria. This replacement is poor in that it uses (some but not all) different folder names and file locations for some files, installed into a distro which would prefer that poor dumb users not access the system level files at all (and manifests that preference by making it awfully difficult and roundabout to do so).

Add to that what is in fact probably a bug in MySQL and not a Fedora issue: There are, at last count roughly 42,000 Google hits for a certain MySQL install error in a variety of Linux MySQL installations. Captain Comic Book did not cause this error, but it is fair to say that between its new philosophy of inaccessibility and purist hissy fits, Fedora 20 definitely exacerbated the problem significantly. After probably a total of 24 hours (on and off over the course of a week) trying to work around these limits, I dumped the Fedora 20 VM entirely and moved on.

- Next I tried Ubuntu 12.04 LTS. No, it's not the 'latest' version. But that LTS label stands for long term support and Ubuntu was up to date. That didn't bother me; what concerned me is that Ubuntu has also gone the way of dumbed-down Linux (although Ubuntu has always tended toward dumbed-down by default, so it wasn't as long a trip in Ubuntu's case). This time I only dedicated a couple of hours to attempting LAMP in this environment. In the end, the limitations of dumbed-down Linux (Gnome 3, fighting against access limitations even an alternate GUI cannot overcome, and the same MySQL error which essentially requires system level access to a degree Ubuntu resists) were too great to overcome. As with Fedora 20, Ubuntu also did not cause the MySQL error, but Ubuntu did render it essentially not resolvable.

- Last I tried CentOS 6 32-bit in a customized, stripped down developer-oriented VM. MySQL popped the same error as in Fedora 20 (“Captain Comic Book”) and Ubuntu. Fine, I had pretty much decided that the error was a MySQL issue in any case. However, here's the difference. CentOS has not messed around with folder names; MySQL is still MySQL. CentOS has not messed around with accessibility; root access is still root access. Therefore while MySQL installed in CentOS experienced the same exact error as with the other distros, I was able to fix it in around five minutes. Literally. Five minutes, and move on.

A couple of hours without a Linux distro resisting every inch of the way and the LAMP stack is customized and ready to work.

As I noted, LAMP (Linux, Apache, MySQL, PHP) operates as a bundle. If one component does not work as needed, none of it works. When the M has an error it does not matter what the cause is. If the L prevents fixing the M, the A and the P might as well not be there at all. Various dumbed-down Linux flavors are shooting themselves in the foot by rendering entire bundles like LAMP inoperative. That should be seen as a caution to those distro developers. It should also be seen as a caution to CentOS on where not to go as Gnome 2 support approaches end of life.

Closing thought. When making a complex construction like a LAMP stack, backing it up is like the Chicago ward boss said about voting: you can never do it too many times.

(Through 3 May 2015, the LAMP stack virtual machine is available at Amazon and eBay.)

Share this on ![]() witter

or

witter

or ![]() acebook.

acebook.

A Dollar Donated via PayPal

March 14, 2015

A dollar donated via PayPal may possibly be a dollar lost.

Today's post is dedicated to all of those people who have provided exceptional content online, ask to get a small amount of recompense, deserve to receive it, and possibly never will. I have seen software applications, WordPress plugins, Mozilla plugins and blogs offering high quality items essentially for free, with the request, not a demand, that the user may, at their option, donate a little something to the project developers. Given the quality of some of these offerings, the users are getting an excellent deal in exchange for an optional donation.

But there are a couple of problems with the model. First understand what PayPal will permit the developer (blogger, etc.) to do. The developer may:

- Sign up to receive donations via PayPal,

- Actually receive donations in a PayPal account

Here is what the developer may not be able to do:

- Get paid. That is, withdraw actual spendable money from the PayPal account. Here's why:

To receive actual money received as a donation through PayPal (that is, not to have money lodged in a PayPal account, but actually to withdraw it, put it in your pocket and spend it) the recipient of a 'donation' must:

- be a legal, proper charity according to government rules (for example a 501(c)(3) non profit), or,

- eventually get PayPal's approval regarding how 'donated' funds will be spent.

The problems with PayPal donations, then are these:

- I am willing to wager that the vast majority of small developers who create a small software application or write a blog are not registered with the government as charities. These developers equate a 'donation' with the equivalent of a virtual tip jar. PayPal does not necessarily define a 'donation' the same way, AND,

- I am willing to wager that the vast majority of small developers will not become aware of this difference in definitions until PayPal asks them to provide their charity registration documents or explain how their donation schema correlates with the concept of charitable fundraising.

Four points remain to be considered. What to do about it, whether small developers have been cheated, why it's set up that way, and why I am bothering to write this up on my blog.

- What to do about the structure of donations versus payment? If using PayPal as the payment mechanism, change your PayPal payment type and website to accept payments and not donations if you are not a charity or collecting donations with charitable intent. Sorry, that's the way that it's done unless you explicitly have the mechanism in place to receive charitable donations. If an 'Add to Cart' or 'Buy Now' logo strikes a small developer as too commercial for what the small developer sees as a virtual tip, PayPal does permit the small developer (now technically a merchant) to upload a different button image instead.

Also, test your work by paying yourself a dollar or whatever amount you charge for your excellent project. Make sure the transaction registers as a payment and that you can get the actual withdrawal completed. Remember, simply receiving the money at PayPal (or in any other online account anywhere) is essentially meaningless; no one can ever truly be said to have been paid until the check clears.

- Have small developers, bloggers and the like been cheated by requesting donations through a structure which they may be unable to collect from? Oddly enough, no. The definition and responsibilities of a proper charity and of those accepting 'donations' is clearly set out in PayPal's terms of service.[1] I'm guessing the reason that small developers miss the difference in definition is that they equate the mental model of the tip jar with 'donations' and never read the fine print.

- Why does PayPal set up their system this way? I haven't a clue. I cannot speak for PayPal, I am not affiliated with PayPal in any way and cannot speak to, nor would I even want to guess or assume anything at all about PayPal policies or practices or motivation. I am neither a lawyer nor an accountant. I cannot and do not give legal or financial advice. However, I have worked with investments in a bank, and I can say why a theoretical Banco Philly might set up such a system:

With a small developer getting a few dozen or couple of hundred dollars a year in 'donations' it will be long time before the small developer discovers that they were not supposed to accept 'donations'.

Also, once the small developers do discover a difference of definitions, there would be still more time during which Banco Philly would graciously wait for the small developer to provide legal paperwork or other evidence of charitable intent (which Banco Philly knows the small developer likely can never produce).

During all of this time, Banco Philly will earn interest income on the small developer's money that it's holding. By itself, that's pocket change, but interest on a hundred dollars here and a couple of hundred dollars there, times a few million accounts, plus the time Banco Philly graciously waits for the small developer to provide legal paperwork or other evidence of charitable intent (which Banco Philly knows the small developer likely can never produce), can add up to massive interest income for Banco Philly.

That's called the float, the time between which money is received and paid out. Earning interest on money held during the float is perfectly legitimate, and that's how and why Banco Philly would do it.

- Why am I bothering to blog about this subject? I continue to see to this day, a lot of sincerely superb projects on the internet for which PayPal 'donations' are requested. I use a couple of those projects long term and have used others only for obscure technical tasks. Elements which some of these truly exceptional projects have had in common are:

their undeniable excellence,

the fact that the small developers want a small consideration for truly excellent work,

the unfortunate request for a 'donation' instead of a payment on the small developer's site.

This last may mean a small developer patiently watching a donation balance grow, only to discover that requesting a 'donation' violated a clearly stated policy and that the developer may receive nothing for their efforts. This blog post is my 'donation' to small developers everywhere who may have picked the wrong category for their PayPal account, who set out the virtual tip jar expecting some small well deserved consideration for excellence.

For the gal who wrote the wallpaper, for the guy who wrote the plugin, and the other one who had that truly awesome tweak for VirtualBox, and who all had a 'donation' option on their pages, and for thousands like them, this one's for you.

[1] PayPal. Donation Buttons. Retrieved March 13, 2015. https://www.paypal.com/us/cgi-bin/?cmd=_donate-intro-outside.

Share this on ![]() witter

or

witter

or ![]() acebook.

acebook.

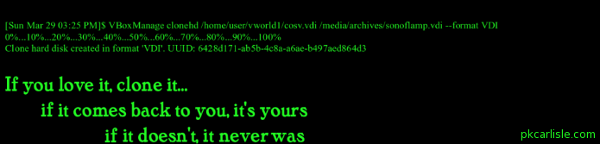

Tweons: Horribly Helpless Twitter Peons

March 12, 2015

This is, well, not the story, but another chapter in why social media outlets self destruct. It's happened before, it will doubtless happen again. In that sense, the story does not have a beginning and an end. It just goes on and on...

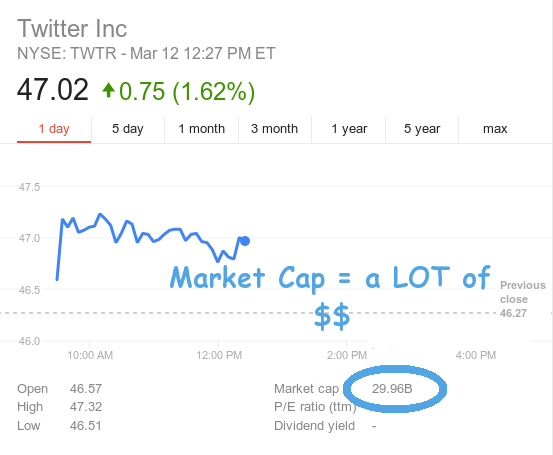

Today's chapter is about a $30 billion company called Twitter. That's billion, with a B. For perspective, Twitter could buy a stealth bomber and not even miss the cost.

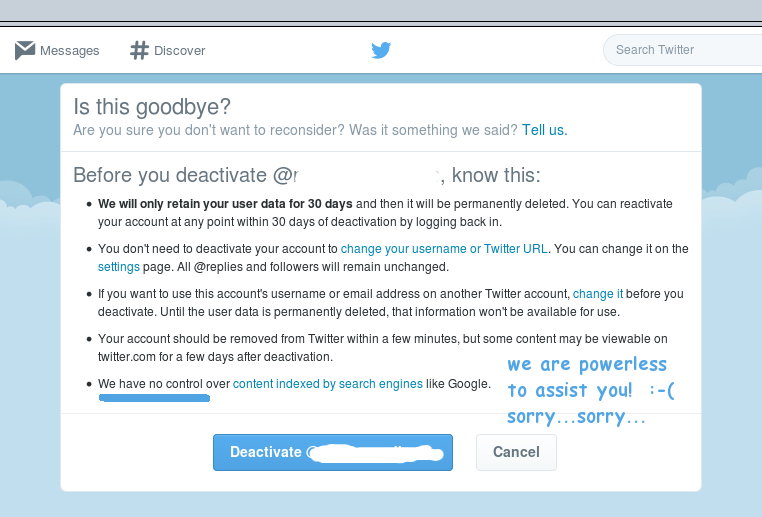

But Twitter, for all of its abundant dollars is helpless to assist its customers. They say it right here.

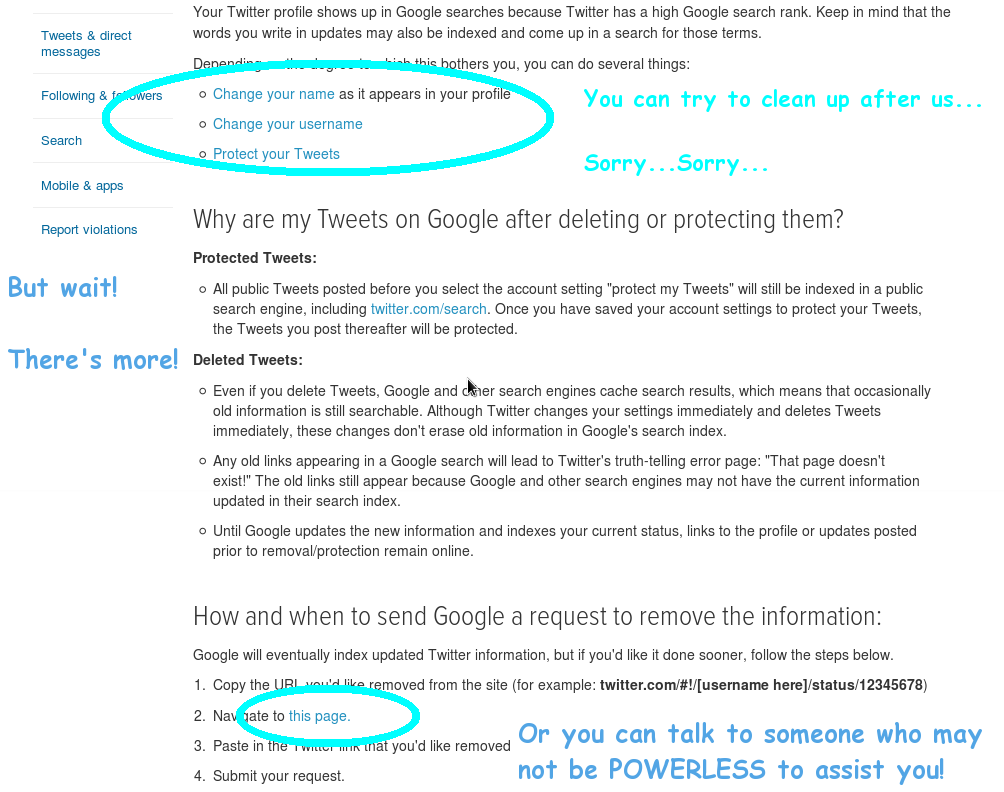

Yes, when it comes to allowing Google to post tweets as part of a Google search, Twitter appears to have fallen prey to that all too popular American business model, the helpless peon syndrome. Their options would be to make a meaningful effort to protect Twitter users and negotiate an opt out with Google, considerably more involved and a potentially expensive option, or to opt for policy by one-liner exemplified in the helpless peon syndrome: we can afford an air force larger than that of some countries, but “we have no control.”

A subtlety, an optional variant of the helpless peon syndrome, is to refer any customer you have no intention of helping to somewhere, anywhere, elsewhere as soon as possible. Blame anyone, everyone, someone else! One way Twitter leverages this subtlety is by linking to Google. Dealing with Google on a one-on-one basis is, as always, a difficult exercise (although they do have some relatively convincing bots responding to emails), but is also not really the point here. The point is the $30 billion company arguing a position of helplessness. Is that an argument you really want to win?

- To successfully argue helplessness is to argue helplessness.

Another quite popular variant is to blame the user. Play Behind the Iron Curtain, says Twitter. Change your user name and hope that you cannot be linked to existing content. Twitter itself is powerless to assist you. The point is that when a multi-billion dollar company tells its customers how anyone, everyone, someone else is responsible, it says something fundamental about the company's values and sense of worth they hold for their customers.

One of the biggest problems with the helpless peon customer service model is the tempting immediate success and eventual failure inherent in the model. The helpless peon model succeeds in that it brings fast, fast, relief. Unhappy customers go away. However, customers go away unhappy, and that is the long term flaw in the model.

Twitter is no exception to the rule. The helpless peon policy successfully sends customers away, undoubtedly true, but it sends them away unhappy. It logically follows that built into that policy model is the assumption that it is acceptable to have unhappy customers. In the end, it matters not at all if Twitter is to blame or not, Twitter ultimately assumes the responsibility for unhappy customers. As Facebook and My Space may attest, a social network accepts an unhappy customer model at its long term peril.

- To successfully avoid helping unhappy customers requires unhappy customers.

Share this on ![]() witter

or

witter

or ![]() acebook.

acebook.

WordPress Conversion - Episode III: A New Nope

March 9, 2015

Mail:

Mail. I got mail about the last blog post. Thought provoking comments, all. What the internet is supposed to be all about. I'll address some of the highlights here.

I got references to several SEO and monitor type tools. I have not assessed them yet, so I will not go into names of applications. For SEO and monitoring tools to be useful, it follows (or precedes, as is actually the case) that one must first develop a site worth deploying or monitoring. Since I have not produced anything in WordPress which would not make me cry for shame, deployment is out of the question at this point.

One response addressed my assertion that WordPress sites appeared 'cookie cutter' in appearance. I was working with WP theme Twentythirteen because it was so well commented, but tendency toward that theme may be the reason that WP sites overall seem to be so similar. I accept the reasoning, but that leads, in my opinion, to a conflict. If one theme tends to be a choice because it is well commented and therefore more readily comprehensible, how does one justify using a theme which is not well commented? Or does one even justify it at all? There were three tangents to the response which addressed the conundrum with (again, my opinion) varying degrees of efficacy.

The first option was to accept the cookie cutter appearance if the commenting of the theme was so important to the ability to design that it made a critical difference. I accept the logic, but personally feel that if the result is a similarity of sites to the degree that a non-WordPress user can visually identify WP sites, that might make WP a lot less attractive in the long term. Still, it's a working option, so, noted.

The next option was to learn WP and accept that code in WP templates will not be commented. The argument goes that comments in code must be loaded as a web site loads. Therefore the comments slow down the loading of the site every time that a comment exists. Also, real professionals do not comment code; in fact that's how to identify the code as the product of a professional. I have a couple of responses to these arguments.

State of the Code:

For one, I have done some coding, and I hate to comment it. It works as coded, so what's the problem? The problem is exactly what I am addressing in trying to work with WP templates. The developer designs the theme, hands it off to someone else (myself, the developer of the specific site) who in turn finds it difficult to use because the code is not commented. Without comments in the code, using the code which is handed off means that what should by all rights be a simple process becomes a bizarre ritual.

Sorry, we're going to have to agree to disagree on this one. If you code it, comment it. If you don't comment it, it logically would not and should not be used as often as well commented code, especially when a theme is designed to be a template, is designed with the explicit understanding that it is to be further modified. As for the argument about website load times increasing from having to load pages including full comments, I don't buy it. You can run Netflix inside of Firefox inside of Windows inside of VirtualBox inside of enterprise Linux and still watch a movie. A medium sized JPEG graphic is in the 25-50K range. Bandwidth, processing and memory are sufficient these days that loading 5 or even 10K of extra code which includes comments won't even be noticed.

And if the concern about comments remains, by all means, write a script to cleanse pages of comments when ready for deployment. But don't stick a template with 10,000+ lines of code online, 5% of which is commented, and wonder why it's not useful. Last but not least, there's the option used by Twentythirteen: name your theme's variables something rational compared to what the variables do. That lowers code which must be loaded on the user's browser and still leaves a usable theme for website developers.

The argument that commenting code is passe, that the need for code comments reflects the ignorance of the web site creator and not a fundamental flaw in the code itself is lovely, wholly, robustly, modern American. You have two choices: Comment your code properly (a lot of work), or, take offense that someone would be offended, tweet it thereby making it real, and go have a latte (a lot less work, and the choice 4 out of 5 Americans recommend most).

Meanwhile, here is the realistic state of the code comments: Thousands of blog entries exist, each addressing a particular snippet of code as someone discovered and resolved the effect of that single specific uncommented code snippet. The very fact that there are thousands of individual pages from thousands of individual users addressing thousands of individual code snippets should indicate that there is a fundamental flaw in the product, when thousands of separate pages exist in no rational order, essentially writing the documentation piecemeal which should properly exist in the first place.

You've Been Here Before:

I would like to pretend that I am not shouting into a hurricane with my observation, but I realize that I probably am. Take Linux and Python as examples. Both are lovely examples of what they do. Both have, to put it charitably, substandard docs (again, applying my definition: that tens of thousands of piecemeal blog entries dealing with heretofore undocumented or poorly documented functions, documented and posted independently by thousands of individual bloggers as they are discovered and figured out does not equal quality documentation).

WordPress is unfortunately technically in the same situation, and in fact the situation is worse. WP is every bit as poorly (but not necessarily more poorly) documented, true, but WP now has precedence. WP can say, 'Look at Linux, look at Python. Whomping out 10,000+ lines of uncommented code with cryptic variable names or poorly described functions is perfectly acceptable, it's the end user's fault, I'm offended that you do not see the Christlike perfection of the project, and that's a tall skim latte.'

WordPress Frameworks:

Another mail comment was to seek out a WordPress Framework. Being experimental at this point, I looked into free options. The comment which I received was along the lines that with such a tool, it would not even be necessary to touch code. Awesome. I looked for a Framework. Now I may be using them wrong, but these Frameworks are essentially just themes. They have a default appearance for your page (kind of a cross between Microsoft and a coloring book in appearance) and thousands of uncommented lines of code, documented piecemeal in thousands of blogs, etc., etc. In fairness, the framework/theme I have played with the most does add one (and only one) 'codeless' feature to the dashboard to disable the otherwise exceptionally well hidden “Proudly created in WordPress” blurb (which, by this point, is in itself no bad thing). Otherwise, the framework is just the same as any other theme: accept a cookie cutter design or stumble through thousands of undocumented lines, blah, blah, blah.

One Approach:

My approach to this attempt at WordPress conversion is to go into these themes and disable as many options as possible. Let's look at the options realistically.

Option 1:

- Spend 5-10 minutes Google searching various blog posts for how to do task a. Since these blog posts are volunteer efforts at documentation and narrowly tailored to resolving one specific problem in one specific version of one specific theme, maybe it is similar to what I am looking to adjust, maybe not. Dunno. So, read multiple blog posts.

- Spend five more minutes of my limited time on this planet figuring out which file the code snippet belongs in, edit and save the code.

- Reload web page to check results.

- If not successful, remove code changes and save code.

- Repeat Option 1. Possibly for hours.

Option 2:

- Rather than customizing (say, a menu), figure on removing it.

- Similar to Option 1 in operation, but generally requires fewer guesses, fails, reverts and subsequent searches. And hours.

The problem with Option 2 is of course, the WP theme becomes so limited in functionality that it may as well be HTML. All of the undocumented features are simply disabled. So why not simply do HTML and be done with it? I am not exactly sure why not, and that is the beginning of deciding that WordPress just may not be worth the bother. However, I'll keep plugging away at it awhile yet, not because I am sure at this point that WordPress has something to offer, but more as a matter of will.

To Be Continued:

As a closing thought for this episode, there is another factor which must honestly be considered regarding the utility of WordPress, especially with regard to disabling features. All too many of the newly revamped WordPress pages I am seeing use the WordPress equivalent of pop-ups. I'm not sure what the WP terminology is for these sliding, fading panels, and it's not really important at this point.

What is important, is that these are, label notwithstanding, pop-ups (of a variety which browser pop-up blockers have yet to block). So, then, the advanced WP features (and the reason that I should want to use WordPress?) is to enforce on my site the very annoyances which make me leave other websites when I encounter them? The annoyances which, in a different web technology model, have long since been addressed? It does not really matter whether it is labeled as a pop-up or a Persistent Interactive Sliding System Engaging Multiple Optional Fill-in Fields (PISSEMOFF) or a User Parameter Yielding Objective Usage Research Statistics (UPYOURS), it is a pop-up by any other name, and it is as annoying today as ever it was.

Share this on ![]() witter

or

witter

or ![]() acebook.

acebook.

WordPress Conversion - Continued